The Superforecasters

How a college-based team of students, faculty and volunteers bested government intelligence community prognosticators and gave birth to Superforecasting

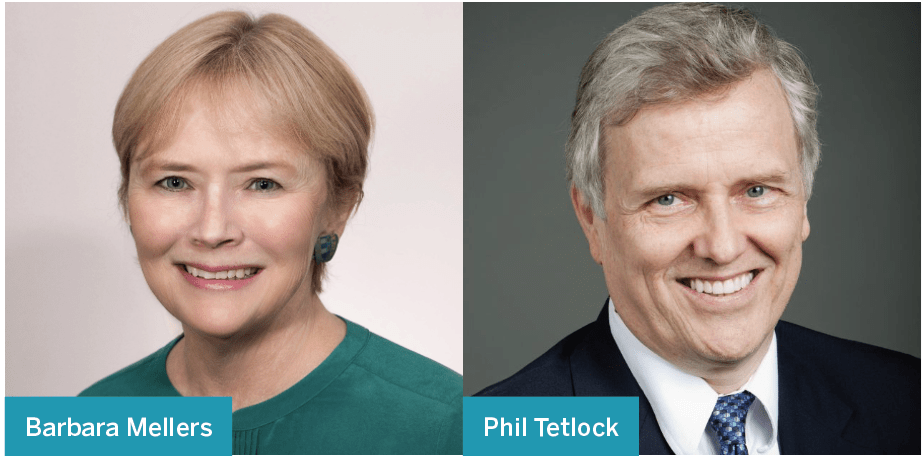

A husband-and-wife team of scientists became giants in the field of forecasting by creating and overseeing the Good Judgment Project, a massive and lengthy academic inquiry into how to train predictors and improve their predictions. In the process, they coined and popularized the term “Superforecasting” and spawned a company that provides forecasts for businesses and governments.

“The story started in the summer of 2009,” recalls Barbara Mellers, one half of the research duo. “My husband, Phil Tetlock, and I decided to apply for a contract with IARPA.” That’s the government’s Intelligence Advanced Research Projects Activity, an entity she describes as “the little sister to DARPA,” the much larger Defense Advanced Research Projects Agency.

“We put this grant together as a chance to work together on something that interested both of us,” notes Mellers. She and her husband both teach psychology, and they’re both cross-appointed at the University of Pennsylvania to the School of Arts and Sciences and The Wharton School.

They were seeking funds that IARPA awards to projects of interest to the intelligence community. Unlike DARPA, which tends to keep its research classified, IARPA shares some of its findings with the public, which fits with the mission of academia. But a slight misalignment arose when IARPA took three or four months to notify Mellers and Tetlock that their application for a project had been accepted.

The delay made things a little “awkward,” as Mellers remembers it. By then, she and Tetlock were leaving the University of California at Berkeley to assume their current positions at Penn. Some of the funding had to filter through UC Berkeley, so the project initially included five or 10 students and faculty members from that school under the direction of Prof. Don Moore. Mellers and Tetlock recruited 20 or 25 students and faculty from Penn, as she tells it. Contingents from Rice University, Hebrew University and other institutions later joined the project.

Tournament of forecasting

They were working on what IARPA calls an ACE tournament, which stands for Aggregative Contingent Estimation. Think of it as five university-led teams competing to come up with the best probabilistic geopolitical forecasts from a large, dispersed crowd of volunteers.

The project got underway in 2011 and continued until 2015. After the second year, IARPA eliminated funding for all of the university groups other than the Mellers and Tetlock contingent from Penn. They were declared the winners, and for another two years IARPA continued to help fund their research as they competed against control groups and intelligence analysts while also attempting to match or surpass benchmarks.

Meanwhile, IARPA set up a prediction market inside the intelligence community. It operated on two levels, depending upon the security clearances of the participants. The university group and the intelligence analysts were forecasting the same questions, but the analysts had more information because of their security clearances. Even so, the Good Judgment Project Superforecasters were 30% better than the intelligence analysts. “That was the good news and the bad news,” Mellers wryly suggests.

But how did the team from Penn achieve that success? Strength in numbers helped. “We recruited more people than the other teams,” Mellers says. “We had better access to people who were able to get us volunteers.”

By the numbers

In the first and second years, the Good Judgment Project pooled the wisdom and prediction prowess of between 3,000 and 4,000 volunteer forecasters. Near the end of the third year, Tetlock and Elaine Rich, a pharmacist the project had identified as a Superforecaster, sat for an interview on All Things Considered, the National Public Radio show.

Rich explained on the show how her forecasting hobby had blossomed, and her story inspired 20,000 volunteers to sign up for the fourth year of the project. But many balked upon finding how burdensome it was to gather the knowledge necessary for accurate predictions, and the pool of forecasters narrowed to 5,000 or so by the end of the final year.

Each year, the project analyzed the performance of each forecaster and assigned them scores, recognizing the top 2% as Superforecasters and directing them to work in teams during the remaining years of the program.

“That was our best, strongest intervention,” Mellers says of choosing the Superforecasters and forming the teams. “That was like putting these people on steroids. They were really hard workers, but they worked super-hard when they were together to help each other and do well and compete. It brought together all the things they like to do.”

Ten to 15 people participated on each team, and they collaborated via internet chat rooms. “They corrected each other’s mistakes, shared articles with each other, motivated each other,” Mellers notes. “They debated the logic of certain rationales, and they just went to town. It was just remarkable.”

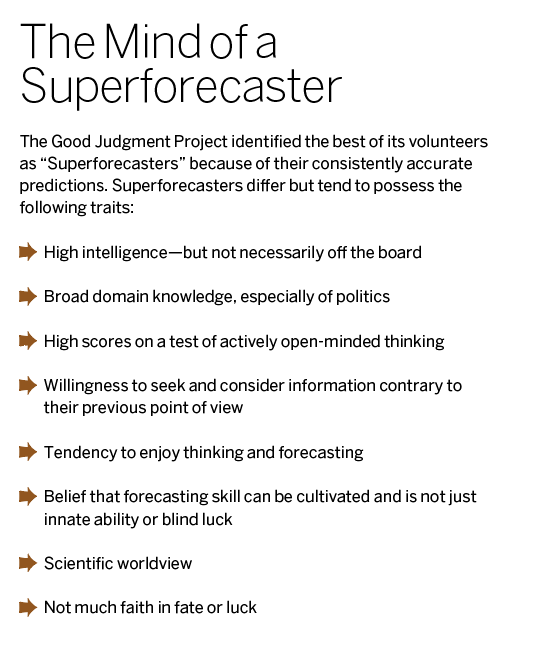

The Superforecasters came from all walks of life—one worked as a plumber and another as a professor of mathematics. Many programmed computers or were employed in other tech-related fields. Whatever their occupations, they all possessed analytical minds (see “The Mind of a Superforecaster,” right).

New beginnings

Although the IARPA project that gave birth to Superforecasting has ended, the work of the Superforecasters continues. Some have formed relationships with Good Judgment Inc., a company spun off from the project to serve clients in the private and public sectors (see “Getting Predictions Right,” below).

Meanwhile, Tetlock, Mellers and some of the Superforecasters have formed the Good Judgment Project 2.0 to compete in another IARPA tournament, one that explores counterfactual forecasting. Participants consider what would have happened if history had been different: if Hitler had been born a girl, for example.

“The goal is to help people think about how we can learn from history,” Mellers says. “We do it by intuition and make counterfactual forecasts.” They test the accuracy of counterfactual forecasts by comparing them with simulated games.

Both of the tournaments serve a higher purpose, according to Mellers. “You find out very quickly what works and what doesn’t work,” she says of the competitions. “It’s a fantastic way to speed up science.”

Getting Predictions Right

The U.S. intelligence community failed to foresee the 9/11 attacks and erroneously predicted that Iraq possessed weapons of mass destruction. The time had come to find better ways of using the wisdom of the crowd for forecasting.

That’s when intel officials hit upon the idea of staging a tournament to challenge academic minds to develop better ways to foretell the future. One of the competing teams—a University of Pennsylvania group called the Good Judgment Project—rose to the challenge and may have changed history.

Under the leadership of Profs. Barbara Mellers and Phil Tetlock, the Penn group discovered and then pursued Superforecasting.

The Super 2%

Meet some of the Superforecasters who emerged from the Good Judgment Project.

Dominic Heaney

45, Southeast England

Occupation: Editor in academic reference publishing

As a forecaster, are you a skilled generalist or an industry specialist? A bit of both. I’ve done a lot of work on geopolitical forecasting, particularly with regard to the regions I cover in my main job.

How do you typically use your forecasting skills? I’ve definitely assimilated the art of thinking—in any number of areas, personally and professionally—in terms of “this event has an X% chance of happening,” and writing off things or possible courses of action that are non-starters.

What advice would you give to aspiring Superforecasters? Think outside the box, look around arguments, consider base rates as a strong guide and don’t be afraid to be considered contrarian or unconventional if you are well-informed and pay the respect due to the devil’s advocate positions.

Welton Chang

37, Washington, D.C.

Occupation: Chief technology officer

How and when did you become a Superforecaster?

I got a note from my old undergrad advisor about a tournament being held to gauge political forecasting accuracy. I jumped at the chance. About a year later, I got a note back from the folks running the tournament that my scores were in the top 2% of all of the forecasters. Two years later, I joined the Good Judgment Project as a grad student researcher working directly with Barb Mellers and Phil Tetlock.

How do you typically use your forecasting skills? These days, I generally think about improbable but highly impactful events—not quite “black swans,” but more in the gray-colored territory.

What advice would you give to aspiring Superforecasters? Examine your sources and assumptions before making any forecasts. And when possible, dive deeply into any topic you’re not familiar with.

Shannon Gifford

62, Denver

Occupation: Deputy chief projects officer for the Denver mayor’s office

When and how did you become a Superforecaster?

I became a Superforecaster after the first year of IARPA’s ACE, or Aggregative Contingent Estimation Project.

How do you typically use your forecasting skills?

The practice of forecasting has made me less likely to jump to conclusions of all kinds and more likely to listen carefully to multiple points of view. It also has caused me to read and think about the news in a much more active way, with much more thought about the downstream consequences of current events.

What advice would you give on Superforecasting?

Keep an open mind and be willing to change it, update your forecasts regularly, try to be aware of your biases and set them aside, listen carefully to people you disagree with, and try to maintain humility, especially when you’re forecasting an issue or a question in an area you think you know well.