2025 Tech Investment Theme: Surging Demand for High Bandwidth Memory Puts Micron in Focus

High bandwidth memory is in short supply, and Micron looks well-positioned to capitalize

- Demand for high bandwidth memory (HBM) has surged, placing manufacturers in a prime position to capitalize on favorable market conditions.

- Micron’s new partnership with Nvidia strengthens its position as a critical memory supplier for the next generation of GPUs for AI and gaming.

- With strong prospects, an attractive P/E ratio and bullish analyst ratings, Micron offers a compelling value proposition.

As the world comes to rely on artificial intelligence and other high-performance computing technologies, the need for faster, more efficient memory has never been more critical. At the heart of this transformation, high bandwidth memory (HBM) is revolutionizing the way data is processed.

HBM is transforming AI applications by offering unprecedented speed and performance to meet the growing needs of machine learning, deep learning and beyond. But only a handful of companies make HBM, which leaves Micron (MU) well-positioned to capitalize on this fast-developing market.

The importance of memory in modern computing

Memory technology, the silent powerhouse behind every modern computing device, enables those devices to think, process and perform. It’s the bridge connecting the raw power of processors with oceans of data, whether they are running complex applications, analyzing real-time information or managing massive datasets. Without efficient memory, the intricate tasks we take for granted would grind to a halt.

The rise of data-intensive fields such as artificial intelligence, machine learning and high-performance computing has amplified the need for faster, more efficient memory.

Enter high bandwidth memory (HBM), an innovation that’s transforming how data is processed and managed, delivering the speed and capacity necessary to power the most demanding applications.

HBM tackles a critical challenge in modern computing: the need to process vast amounts of data faster than ever before. Its vertically stacked architecture, introduced by SK hynix in 2013, places multiple DRAM dies on top of one another and connects them to the processor through a very wide interface, so the chips work in tandem to accelerate data transfer. This bold design sets HBM apart from conventional dynamic random access memory (DRAM), such as the DDR modules found in servers and PCs, which, despite its long-standing role in computing, falls short of the speed and power demands of today’s data-intensive workloads.

HBM addresses this challenge by offering significantly greater bandwidth, enabling faster data transfer. G. Dan Hutcheson of TechInsights described it this way: “Think of HBM as the autobahn for data. Its high bandwidth ensures data flows smoothly, avoiding bottlenecks that could slow AI processing.” The resulting efficiency has made HBM an essential component in high-end AI chips, and it is becoming indispensable for AI model training and high-performance computing (HPC).
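To make the autobahn analogy concrete, peak bandwidth is roughly the interface width in bits times the per-pin data rate, divided by eight to convert bits to bytes. The minimal Python sketch below compares one HBM3E stack with one DDR5 channel. The 1024-bit interface, the 9.2 Gb/s pin speed and the DDR5-6400 comparison point are illustrative assumptions rather than figures from this article, and real sustained throughput runs below these theoretical peaks.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gt_s: float) -> float:
    """Theoretical peak bandwidth in GB/s.

    bits moved per transfer * billions of transfers per second / 8 bits per byte
    """
    return bus_width_bits * data_rate_gt_s / 8


# Illustrative assumptions (not from the article):
# - one HBM3E stack: 1024-bit interface at 9.2 Gb/s per pin
# - one DDR5-6400 channel: 64-bit data bus at 6.4 GT/s
hbm3e_stack = peak_bandwidth_gb_s(1024, 9.2)
ddr5_channel = peak_bandwidth_gb_s(64, 6.4)

print(f"HBM3E stack:  {hbm3e_stack:.1f} GB/s")
print(f"DDR5 channel: {ddr5_channel:.1f} GB/s")
print(f"Ratio: {hbm3e_stack / ddr5_channel:.0f}x")
```

Under those assumptions, the HBM3E stack peaks at roughly 1.18 TB/s versus about 51 GB/s for the DDR5 channel. Most of that gap comes from the much wider interface rather than from faster pins.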

Surging HBM demand drains 2025 inventories

Strong demand for advanced memory has pressured HBM makers, resulting in industry-wide shortages. For instance, SK hynix (000660.KS), the world’s leading producer of HBM, has already allocated most of its available supply for 2025. Micron’s HBM output for 2025 is also largely sold out.

Samsung (SSNLF), the second-largest player in the market, has faced its own supply constraints, although it has been less transparent about its specific inventory levels. However, its challenges aren’t limited to supply; reports have pointed to concerns about the performance of its HBM chips, particularly overheating and excessive power consumption during tests conducted by Nvidia. These issues have raised questions about Samsung’s near-term prospects in the HBM market.

The ongoing shortages underscore the immense pressure on companies to meet rising demand for AI-capable hardware. GPUs, which rely heavily on high bandwidth memory to maximize their performance, are in high demand, particularly for AI model training. Without HBM, GPU manufacturers like Nvidia (NVDA) and Advanced Micro Devices (AMD) cannot assemble their high-end AI accelerators, because the memory must be integrated into the chip package during manufacturing.

“The shortage has put a major strain on smaller companies trying to enter the market,” said Jim Handy, principal analyst at Objective Analysis. “The major players—like Nvidia and AMD—are securing the bulk of the available supply, while smaller players are left scrambling for scraps.” As a result of this dynamic, the HBM shortage is expected to weigh on GPU production.

The challenge of meeting surging demand is compounded by the complexity and cost of manufacturing HBM. Unlike standard DRAM, whose output can be adjusted relatively easily on existing production lines, HBM requires a specialized stacking process built around through-silicon vias (TSVs), vertical electrical connections that pass through each die. As a result, ramping up HBM production is slower and more resource-intensive. “Industrywide, HBM3E consumes approximately three times the wafer supply as DDR5 to produce a given number of bits in the same technology node,” according to Micron CEO Sanjay Mehrotra. Given those challenges, HBM shortages are expected to persist into 2026.
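Mehrotra’s three-to-one figure also helps explain why HBM squeezes the rest of the DRAM market. The rough sketch below estimates what fraction of wafer capacity HBM consumes at a given share of total DRAM bits. It assumes the 3x wafer intensity applies uniformly and treats every non-HBM bit as DDR5-like; the function name and the bit shares tried are hypothetical, chosen only for illustration.

```python
def hbm_wafer_share(hbm_bit_share: float, wafer_ratio: float = 3.0) -> float:
    """Estimate the fraction of DRAM wafer capacity consumed by HBM (hypothetical model).

    hbm_bit_share: HBM's share of total DRAM bits, from 0 to 1.
    wafer_ratio: wafer area per HBM bit relative to a standard bit
                 (about 3x for HBM3E vs. DDR5, per Micron's CEO).
    """
    hbm_wafers = hbm_bit_share * wafer_ratio  # wafer units used by HBM bits
    other_wafers = 1.0 - hbm_bit_share        # wafer units used by all other bits
    return hbm_wafers / (hbm_wafers + other_wafers)


# Hypothetical HBM bit shares, chosen for illustration
for bit_share in (0.02, 0.06, 0.10):
    print(f"HBM at {bit_share:.0%} of bits -> {hbm_wafer_share(bit_share):.1%} of wafers")
```

On those assumptions, HBM at 6% of bits would take roughly 16% of wafers, which is why every point of HBM bit share removes a disproportionate amount of conventional DRAM supply.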

HBM competition

Three players dominate the global HBM market: SK hynix, which controls approximately 50% of the market; Samsung, holding around 40%; and Micron, which accounts for about 10%. Despite Micron’s smaller share, the company is strategically positioning itself to capture a larger slice of the market, with plans to grow its share to 20%-25% by 2025 or 2026.

Analysts expect the global HBM market to double in size this year. “Compared to general DRAM, HBM not only boosts bit demand but also raises the industry’s average price,” said a TrendForce report. Supply has tightened as a result, even as SK hynix, Samsung and Micron work to ramp up production to meet the escalating demand.

Micron hopes to use the current shortage to its advantage by rapidly scaling its production capacity to increase its market share. In particular, the company plans to ramp up production of its HBM3E chips, which are in high demand because of their crucial role in AI and high-performance computing applications. Micron’s management has outlined new capital investments to support this expansion, with projections indicating HBM will make up around 6% of its DRAM bits by 2025, up from 1.5% in 2023. Micron also expects the HBM total addressable market (TAM) to grow from approximately $4 billion in 2023 to over $25 billion in 2025.
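Micron’s TAM projection implies very steep growth. The sketch below computes the compound annual growth rate implied by going from $4 billion in 2023 to $25 billion in 2025, then applies the 20%-25% share target mentioned earlier to that 2025 figure. Treating “over $25 billion” as exactly $25 billion and treating the share target as a revenue share are assumptions made for illustration only.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


tam_2023 = 4.0   # HBM TAM, $ billions (approximate, per the article)
tam_2025 = 25.0  # HBM TAM, $ billions ("over $25 billion"; assumed exactly 25 here)

growth = cagr(tam_2023, tam_2025, years=2)
print(f"Implied HBM TAM CAGR, 2023-2025: {growth:.0%}")

# Hypothetical: apply Micron's 20%-25% share target to the 2025 TAM,
# assuming the share is measured by revenue (the article does not specify).
for share in (0.20, 0.25):
    revenue = share * tam_2025
    print(f"{share:.0%} of ${tam_2025:.0f}B = ${revenue:.2f}B")
```

That works out to about 150% annual growth. A 20%-25% slice of a $25 billion market would be roughly $5 billion to $6.25 billion in HBM revenue, a hypothetical figure rather than company guidance.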

While SK hynix and Samsung still dominate HBM, Micron’s recent advances suggest it could close the gap in the coming years. “HBM is a critical enabler for AI model training, and Micron is poised to capture a substantial share of this growing market,” Handy said.

Micron: strong growth, strengthening prospects

Micron’s growth story is gaining momentum, bolstered by its work in AI and gaming, particularly through HBM.

In early 2025, Micron’s fortunes took a positive turn with the announcement of a new partnership with Nvidia. As part of the pact, Micron was chosen as a key supplier of GDDR7 graphics memory for Nvidia’s next-generation GeForce RTX 50 series GPUs, which are built on Nvidia’s highly anticipated Blackwell architecture.

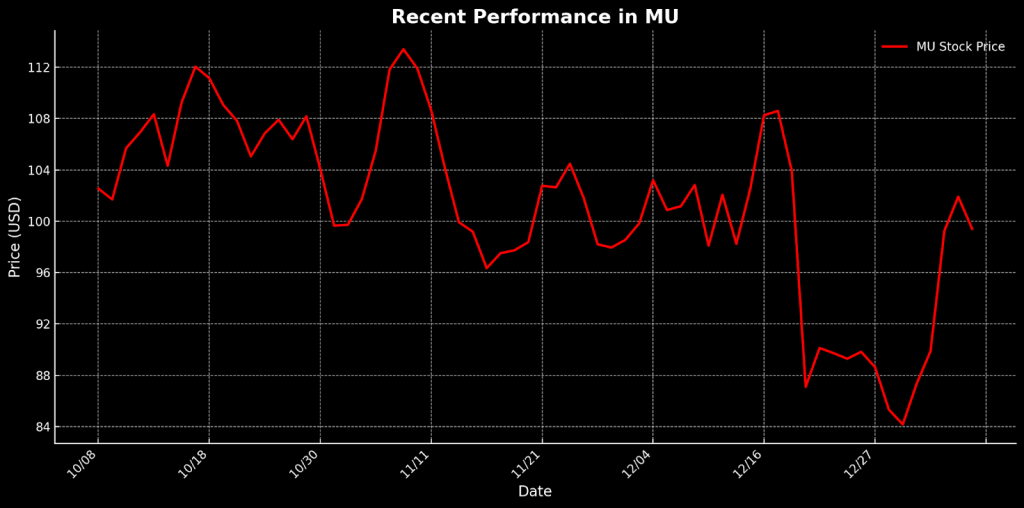

This partnership positions Micron’s memory, including HBM for AI accelerators and GDDR7 for gaming GPUs, as an essential piece of Nvidia’s AI and gaming technology puzzle, two sectors experiencing robust growth. Following the announcement, Micron’s stock jumped 6%, a sign that the market recognizes the company’s critical role.

Micron’s most recent earnings report highlights a strong upward trajectory. The company posted impressive growth in its fiscal fourth quarter, with revenue rising 93% year-over-year. This surge was driven by robust demand for its data center DRAM and HBM products, and Micron has forecast even stronger revenue for the upcoming quarters. CEO Sanjay Mehrotra said the company is entering fiscal 2025 with its “best competitive positioning in history,” signaling a promising outlook driven by continued AI demand and industry-leading HBM offerings.

Besides an encouraging earnings trajectory, Micron’s valuation offers a compelling entry point for investors. With a forward P/E ratio of 17, well below the sector median of 31, the stock is arguably undervalued relative to its peers. This is supported by overwhelmingly positive sentiment from analysts—36 of 43 rate Micron’s stock as a “buy” or “overweight,” while only one rates the shares a “sell” or “underweight.” And as Micron continues to ramp up its HBM production to meet the rising demand for AI infrastructure, its market positioning arguably becomes even more promising.
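The P/E comparison can be quantified with simple arithmetic. This sketch computes the stock’s discount to the sector median multiple and the forward earnings per share implied by a P/E of 17. It takes the roughly $100 share price cited later in this article as an input, which is an assumption about timing, since both the price and the multiple move daily.

```python
price = 100.0            # approximate share price cited in the article
forward_pe = 17.0        # Micron's forward P/E
sector_median_pe = 31.0  # sector median forward P/E

# How far Micron's multiple sits below the sector median, as a fraction
pe_discount = 1 - forward_pe / sector_median_pe

# Forward EPS implied by the price and the multiple (P/E = price / EPS)
implied_eps = price / forward_pe

print(f"Discount to sector median P/E: {pe_discount:.0%}")
print(f"Implied forward EPS: ${implied_eps:.2f}")
```

A 17x multiple against a 31x median is a discount of about 45%, and at $100 a share it implies forward EPS of roughly $5.88.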

Micron shares have gained nearly 19% over the last year, and with an average analyst target of $130 per share, the current price of around $100 implies roughly 30% upside. Despite that recent performance, there’s still considerable room for Micron to rise as new, positive developments unfold. With analysts anticipating strong revenue growth and a favorable market backdrop, Micron appears to offer a compelling value proposition for bullish investors.
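The upside implied by a price target is simply the target divided by the current price, minus one. A minimal sketch using the article’s approximate figures:

```python
def implied_upside(price: float, target: float) -> float:
    """Gain, as a fraction, if the stock moves from `price` to `target`."""
    return target / price - 1


upside = implied_upside(price=100.0, target=130.0)  # article's approximate figures
print(f"Upside to average analyst target: {upside:.0%}")
```

With a $130 target and a $100 price, that is about 30% upside. Keep in mind that an average target is a consensus estimate, not a forecast of where the stock will actually trade.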

Andrew Prochnow has more than 15 years of experience trading the global financial markets, including 10 years as a professional options trader. Andrew is a frequent contributor to Luckbox magazine.

For live daily programming, market news and commentary, visit tastylive or the YouTube channels tastylive (for options traders) and tastyliveTrending (for stocks, futures, forex & macro).

Trade with a better broker: open a tastytrade account today. tastylive, Inc. and tastytrade, Inc. are separate but affiliated companies.