Q2 NVDA Earnings Preview: Why Nvidia Dominates in Artificial Intelligence

Here's a look at Nvidia’s competitive advantage in the AI sector

- Nvidia released blockbuster earnings in Q1 that triggered a huge one-day move in the shares of not only NVDA, but also many of its competitors.

- One of the biggest drivers of increased revenue and profitability at Nvidia was strong demand for AI-focused chips.

- Nvidia is scheduled to release its Q2 earnings report on August 23.

Nvidia currently has a stranglehold on so-called “AI chips,” which generally refers to any microprocessor that’s been optimized for machine learning.

However, Nvidia’s continued dominance in this niche is by no means guaranteed. And an understanding of Nvidia’s current competitive advantage helps illustrate where future challenges are likely to originate.

So what separates Nvidia from its competitors in the artificial intelligence (AI) industry? There are a couple of key differentiators, and they aren’t as complicated as one might think.

First, Nvidia produces the most advanced graphics processing units (GPUs) on the planet, and GPUs are the best-suited (of current offerings) for processing the oceans of data required to train and run generative AI models.

Back in 1999, Nvidia launched its first GPU, called the GeForce 256. The GeForce 256 was marketed as the world’s first “graphics processing unit.” Prior to the introduction of the GeForce 256, graphics cards were typically referred to as “graphics accelerators,” or simply “video cards.”

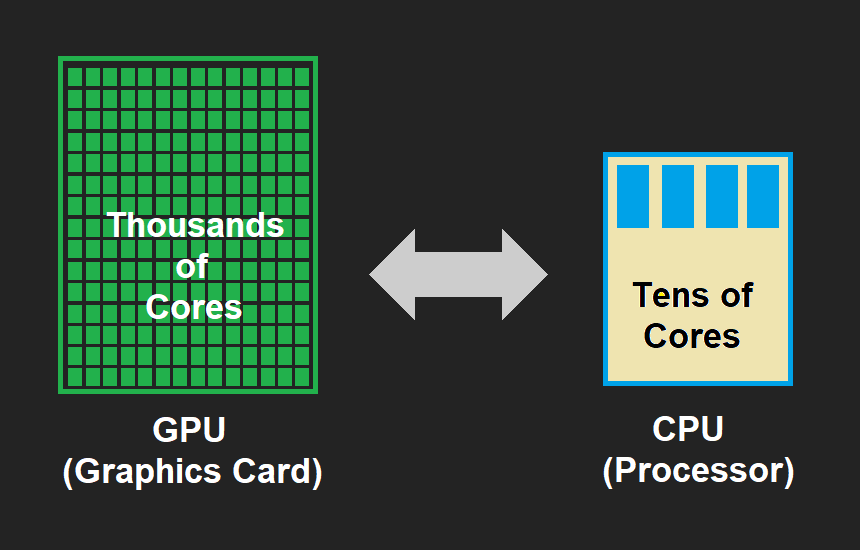

CPUs vs. GPUs

Then, about 13 years ago, some computer scientists experimenting with machine learning discovered that GPUs were better suited for AI-focused mathematical operations than traditional central processing units (CPUs).

For example, Bill Dally—who is now the Chief Scientist at Nvidia—collaborated with one of his former colleagues from Stanford who was working on building a neural network at Google’s X Lab. The two believed that a small number of Nvidia’s GPUs could provide the same horsepower as thousands of CPUs, because they theorized that GPUs might be better-suited for this particular workload.

The experiment worked, and thousands of CPUs were successfully replaced by 12 Nvidia GPUs, proving that GPUs were better equipped for so-called “parallel processing,” which refers to the ability to execute multiple operations/tasks simultaneously.

Unlike traditional CPUs, which have a limited number of cores, modern GPUs have thousands of smaller, more specialized cores. And those cores are equipped to handle numerous operations concurrently.

Source: CpuGpuNerds.com

On top of the additional cores, GPUs also have a unique memory architecture that’s optimized for high-throughput, parallel operations. That architecture spans local, shared, and global memory spaces, which can be used to keep data flowing smoothly during parallel processing.
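To make the idea of parallel processing concrete, here is a minimal Python sketch of the kind of math a neural network relies on: multiplying a grid of weights by a list of inputs. The function names and sample numbers are illustrative assumptions, not anything from Nvidia. The point is only that each row's calculation is independent of every other row, so the work can be split among many workers at once. Python threads will not actually run this faster; a GPU applies the same principle in hardware across thousands of cores.

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vector):
    """One independent unit of work: a single row-times-vector dot product."""
    return sum(w * x for w, x in zip(row, vector))


def matvec_sequential(matrix, vector):
    # A single worker handles every row, one after another.
    return [dot(row, vector) for row in matrix]


def matvec_parallel(matrix, vector, workers=4):
    # Rows are handed out to a pool of workers. No row depends on another,
    # so the workers never have to wait on each other's results.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, vector), matrix))


# Hypothetical weights and inputs, chosen only for illustration.
weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]]
inputs = [1, 0, -1]

print(matvec_sequential(weights, inputs))
print(matvec_parallel(weights, inputs))
```

Both approaches produce the same answer. The difference is that the second one exposes the independence between rows, which is the property that lets a chip with many cores finish the job in far fewer steps.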

Modern AI models

Modern AI models, especially deep learning models, can have millions or even billions of parameters. The architecture of a GPU—and its suitability for parallel processing—therefore allows it to efficiently and quickly execute the necessary operations.
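As a rough illustration of how quickly parameter counts grow, the sketch below tallies the weights and biases in a simple fully connected network. The layer sizes are hypothetical and are not taken from any specific model; real deep learning models use more varied layer types, so treat this as back-of-the-envelope arithmetic only.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    Each pair of adjacent layers contributes n_in * n_out weights
    plus n_out bias terms.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out
    return total


# Hypothetical layer sizes, chosen only for illustration.
small_network = count_parameters([784, 512, 10])
wide_network = count_parameters([4096, 4096, 4096, 4096])

print(f"small network: {small_network:,} parameters")
print(f"wide network:  {wide_network:,} parameters")
```

Even the small example here has several hundred thousand parameters, and the wider one has roughly 50 million. Every one of those values is involved in multiply-and-add operations each time the model processes data, which is why hardware built for parallel arithmetic matters so much.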

For the time being, that means Nvidia’s dominance in GPUs has essentially led to its dominance in AI. But that’s not the only reason that Nvidia’s chips are so well-suited for this niche.

The other key reason that Nvidia dominates the AI space is that it offers a comprehensive AI ecosystem, which encompasses not only superior hardware (i.e., the GPUs), but also superior software.

More than 15 years ago, leaders at Nvidia recognized that the true potential of their GPUs could only be unlocked by providing developers with the right software tools, libraries and development platforms. As a result, Nvidia started hiring more software engineers and programmers, and transitioned into more of a hybrid hardware/software company.

Today, that means Nvidia is uniquely positioned in the industry, as highlighted by tech analyst Jack Gold, who wrote on VentureBeat, “While other players offer chips and/or systems, Nvidia has built a strong ecosystem that includes the chips, associated hardware and a full stable of software and development systems that are optimized for their chips and systems.”

And by bolstering its software ecosystem, Nvidia basically helped ensure that its GPUs would become the de facto standard for AI research and development, which is exactly what’s come to pass. Today, most estimates indicate that Nvidia controls more than 80% of the market for AI-focused chips.

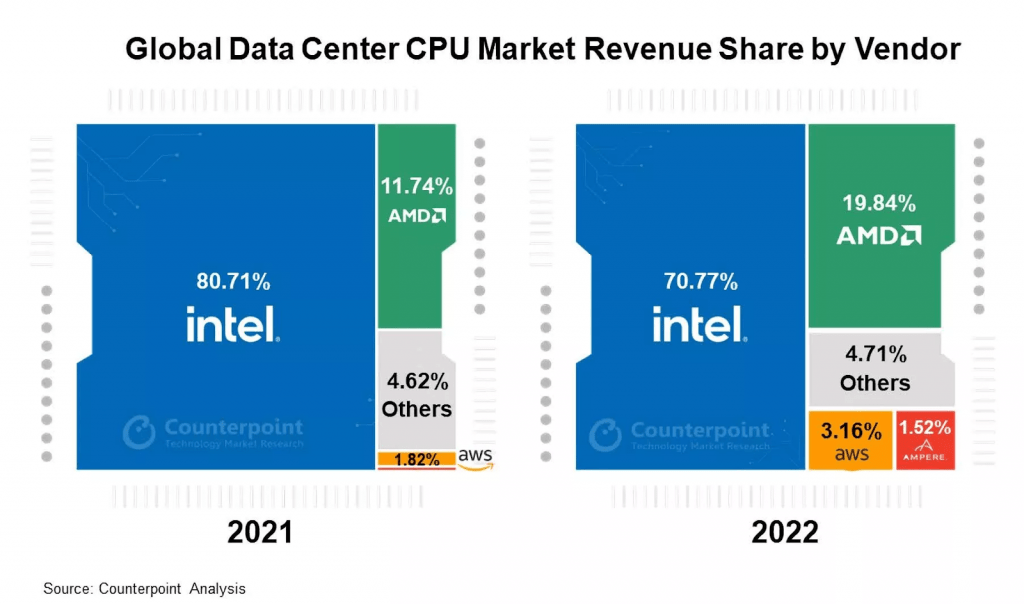

Speaking to the current AI landscape, Dan Hutcheson—an analyst at TechInsights—said of Nvidia’s current position, “What Nvidia is to AI is almost like what Intel was to PCs.”

Nvidia is undoubtedly the AI titan of today, but Hutcheson’s comments also illustrate the potential risks for Nvidia going forward.

As most investors and traders are well aware, Intel’s (INTC) grip on the traditional CPU market has slipped precipitously in recent years due to increased competition, particularly from Advanced Micro Devices (AMD), as highlighted below.

Importantly, however, AMD doesn’t just make CPUs; it makes GPUs as well. And much like Nvidia, AMD has been one of the stock market’s top performers in 2023, with shares up more than 70% year-to-date.

AMD is expected to launch its first major AI-focused chip—the Instinct MI300—at some point in H2 2023. The MI300 features a hybrid CPU and GPU core, and will power the world’s fastest supercomputer—El Capitan—when it launches later this year.

AMD coming up fast

While it may take some time, AMD could eventually threaten Nvidia’s current monopoly in the AI sector, much like it did with CPUs and Intel.

Moreover, investors and traders need to be aware that the GPU isn’t necessarily the be-all and end-all in the future of AI. It’s entirely possible that another new chip, whether a CPU/GPU hybrid or something else, will ultimately displace GPUs in the field of artificial intelligence.

Right now, the term “AI chip” refers to any processor that has been optimized to run machine learning workloads. In Nvidia’s case, that’s a GPU.

But some argue that GPUs were designed specifically for rendering graphics—not machine learning—and have dominated the market thus far because they’ve been successfully adapted to the AI niche using complex (some might say cumbersome) software. Consequently, it’s possible that an entirely new AI-focused chip could one day displace the GPU.

Speaking about the unknown future, the CEO of Graphcore—Nigel Toon—told WIRED, “There are ideas, such as probabilistic machine learning, which is still being held back because today’s hardware like GPUs just doesn’t allow that to go forward. The race will be how fast NVIDIA can evolve the GPU, or will it be something new that allows that?”

At the end of the day, AI chips process data and execute calculations, and if a challenger emerges that does so more efficiently, more cheaply, or both, then it will legitimately threaten the current paradigm.

History says a new (or existing) entrant will eventually disrupt Nvidia’s monopoly, but projecting when that might occur is no easy task. Some of the companies that are currently working on competing AI chips/platforms include Alphabet (GOOGL), Amazon (AMZN), Apple (AAPL), BrainChip (BRCHF), Cerebras, Graphcore, IBM (IBM), Intel (INTC) and Mythic.

Back in May, Nvidia CEO Jensen Huang noted that Nvidia will need to keep innovating to maintain its current edge in the market, saying, “We have competition from every direction.”

Nvidia, of course, isn’t sitting on its hands. The company recently announced that the next generation of its popular H100 GPU will be available next year. The upgraded GPU—known as the GH200—is expected to debut in the second quarter of 2024.

At present, nobody else in the market can compete with the promise of the GH200. And that market reality should translate to increased sales and profitability at a company that’s already valued at more than a trillion dollars.

Nvidia has seemingly cornered the market not only in AI-specific chips, but also in momentum, as evidenced by the year-to-date performance in the company’s underlying shares.

But one day in the future, that momentum will shift—whether that’ll be next week, next month or next year is anyone’s guess.

Andrew Prochnow has more than 15 years of experience trading the global financial markets, including 10 years as a professional options trader. Andrew is a frequent contributor to Luckbox magazine.